LARS notes (Part1) (To page9,2 end)

Abstract:

Useful and less greedy version of traditional forward selection methods.

Main property:

Implement the Lasso, lasso Modification: Calculates all possible Lasso estimates for a given problem.

Different version: Another modification efficiently implements forward stagewise linear regression.

A simple approximation for the degree of freedom of a LARS estimate is available, from which we derive a estimate of prediction error. thisi allws a principled choice among the range of possible LARS estimates.

(Not quite understand the final part of the LARS goals.)

LARS relates: classic model-selection method known as "forward selection" or "forward stepwise regression."

Forward Selection

- Given a collection of possible predictors, select the one largest absolute correlation with the response , say , and perform simple linear regression of on , than leaves a residual vector which is orthogonal to . Project the other predictors orthogonally to nd repeat the selection process. After k steps this results in a set of predictors that are then used in the usual way to construct a k-parameter linear model.

Forward stagewise

More cautious version of forwad selection-> take thousands tiny steps as it moves toward a final model.

Original motivation for the LARS algorithm.

LARS-Lasso-Stagewise connection is comceptually as well as computationally useful.

Model construction:

Predict response from covariates .

By location and scale transformations we always assume that the covariates have been standardized to have mean 0 and unit length, and that the response has mean 0.

Regression coefficients : gives prediction vector

Total squared error

norm for lasso

Quadratic programming techniques can be used to solve (5).though we will present an easier method here, closely related to the “homotopy method” of Osborne, Presnell and Turlach (2000a)."

Forward Stagewise Linear Regression.

Begins with ,builds up the regression function in successive small steps.

Let is the current Stagewise estimate, let be the vector of current correlations

is proportional to the correlation between covariate and current residual vector. Next step is taken in the direction of the greatest current correlation,

Need to mentioned here: is a "small" constant, "small" is important, otherwise "big" choice like leads to the standard forward selection technique. this could be over greedy.

The main point:

LARS is a stylized version of the stagewise procedure that uses a simple mathematical formula to accelerate the computations.

以下是重复论文的无聊描述,参考价值有限,但是因为内容已经高度概括化所以不方便删除,以下是用中文复述一遍主要思想。下午浪费半天一个原因就是因为没注意仔细 是y在上的投影,也就是说,如果我们只考虑 ,那么 就是目标的response. (而且在第一遍看的时候当初理解了这个事实!但是忘了orz)。

是目标response, 此时, 和 的correlation比 大,于是 朝 更新,然后呢,此时的residual 继续对和 找correlation. 慢慢找,直到如上图那个点,这时,correlation相等了,那么就停在这里,进行方向切换。而新的方向则是和 的角平分线方向。而LARS的计算过程可以通过一步直接跳到这个需要换方向的点,可以极大的降低计算消耗。(是否能跳过去这里存疑)

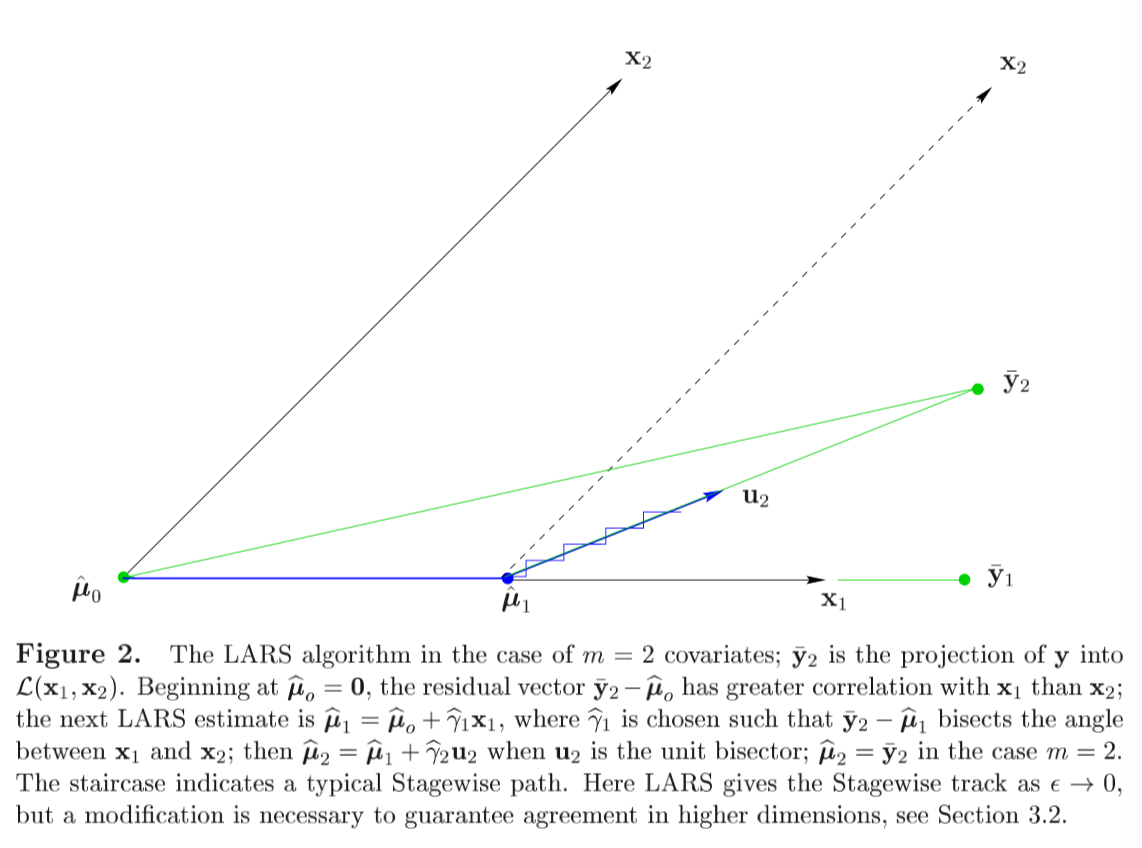

Each step adding one covariate to the model, after k steps just k of the 's are non-zero. The figure2 showed the m=2 covariates, . In this case the current correlation depend only on the projection of into the linear space spanned by and .

- The algorithm begins at , Figure 2 has making a smaller angle with than . In this case, the new correlation can be expressed as

Then, choose as the direction.

Stagewise would choose equal to some value , than repeat many times, or make larger enough to make equal , the projection of y into .

LARS uses an intermediate value of , the value that makes , equally correlated with and ; that is, bisects the angle between and , so .

be the unit vector lying along the bisector. The next LARS estimate is

With chosen to make in the case m=2. With covariates , would be smaller, leading to another change of direction.

LARS is motivated by the fact that it is easy to calculate the step size , short-circuiting the small Stagewise steps.

We assume that the covariate vector are linearly independent.

For a subset of the indices , define the matix

when the signs equal . Let

being a vector of 1's of length equaling the size of . The

is the unit vector making equal angles, less than , with the column of ,

We saw the previous part in a negative direction. First, we look the final part (12), which should be satisfied as the equal angular .

We need find a vector satisfied , now note that . That is , we have in the left hand side. so have a candidate , then what we need to do is just standardize it.

This is how the equal-angular vector is constructed.

So the problem becomes to a nother one, why the form of vector making equal angles?

That is, what is this formula mean?

Equal angular is equals to which angular????

That is, the between any subset and = . In this case,

is the vector about , then if the angular is equal, cos also should equal. However, projection to the direction in , then right hand side is the length of projection times 1. ( is a value rather a matrix.)

The describtion upon is so confusing!!!! need more clear idea about it!!!!

Then is the "fullly" describe about the LARS.

Start with and build up by steps.

Suppose current estimate is ,that , is the current correlations. is active set which indices corresponding covariates with the greatest absolute current correlations

Letting

we compute and we showed previous, the equal angle trisector and the inner product vector.

Then the next step of the LARS algorithm updates , say to

where

- 还是老样子从 开始,所以还是两件事,计算当前的correlation . 其中 是一个indices set包括了每一步最大相关系数。这里有点奇怪。特别是 的定义形式。

Emmmm,是不是可以这么解释,因为开始转向的时候,所以此时往的方向走并不会影响和 但是会影响。 所以等走走走, 又会减小减小。好像不太对,residual的变化。。。。如果按figure2的话,residual对 的角度一直在增大,角度增大导致correlation会减小,因为 的 在增大, 方向的解释越来越多,而同时,对 的角度一直在减小,也就是cos在增大,correlation在增大,一减一增两个过程直到这两个correlation相等

然后回到这个过程,也就是在找了一段时间之后,有记录: 。注意correlation的定义式: 是 , ,是此时的模型的已建模部分 的residual关于 X的 correlation。

按角平分线的思路,这时候在第 维的变化是主要的,维上应该都是角平分线所以是一致的?

但是 这样定义感觉很奇怪啊。 里面只包含了correlation是最大的那几个,也就是说一直走一直走,走到有新的correlation能加进来,那再改变方向,重新计算,否则就一直按这个方向走。比如说回到figure2的图例,在一开始,最大的correlation只有这个方向,走一小步, 方向的correlation还是小于 ,所以还是只有{1}。直到residual 的correlation到和 一致,那么就有了两个同时达到max的correlation,那就得重新计算 .

那这么理解就没问题了,于是下一步是通过这些东西计算步长.

- Back to paper understanding mode

Define:

for , so that the current correlation

for , around the equation (20), the definition of correlation, max correlation, direction , yield

也就是当前的j的correlation,是最大correlation减去步长乘以和投影向量的长度有关的一个玩意()。注意,这里 。也就和之前描述的,因为走的是角平分线,所以这帮已经active的variable同进退。

然后下一步该考虑的就是不在里的j。

For , the two formula upon shows that equals the maximal value at . Likewise , the current correlation for the reversed covariate , achieves maximality at .

Therefore in (24), is the smallest positive value of such that some new index joins the active set; is the smallest positive value of such that some new index joins the active set; is the minimizing index in (24) , the foot length of every j in . the new active set is , the new maximum absolute correlation is .

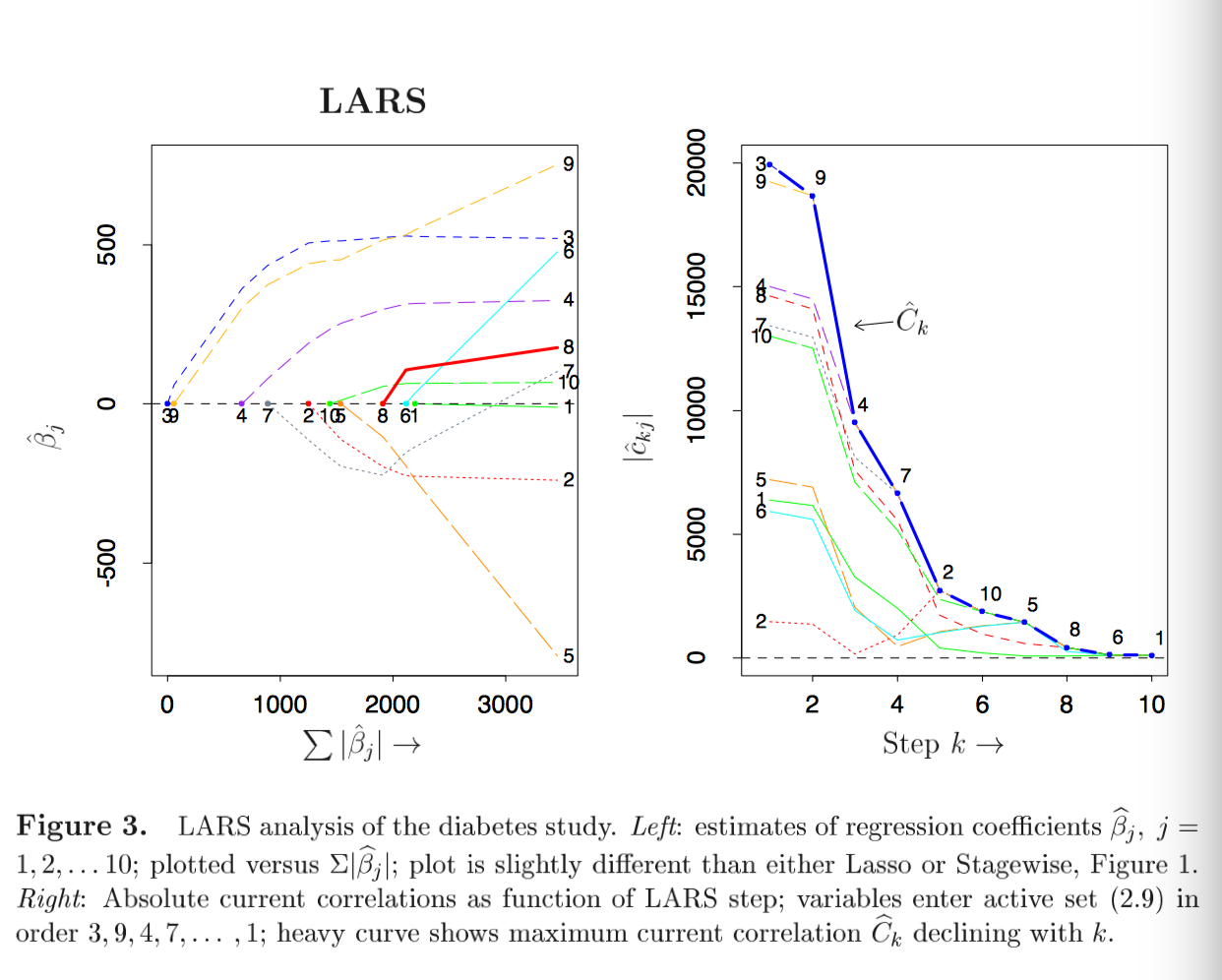

The figure 10 shows the LARS in diabetes data. 10 iterations for procedure from start to end. The join order or LARS is same as Lasso. However, tracks of are nearly but not exactly as either the LASSO or Stagewise tracks.

The right panel shows the absolute current correlation goes done with the LARS step k.

Declines with k.

Relation between LARS and OLS.

Suppose LARS has just completed step k-1, giving and is embarking upon step k.The active set will have k members, giving and . Similarly, let indicate the projection of y into , which, since , is

因为等角性质和Ak里面的东西在correlation上同进退,所以有

Since is a unit vector, (29) ,则有 有长度

和update的那个公式进行比较,则LARS的估计 在 到 的延长线上.

可以发现一个问题, 总是比 小,所以 LARS estimates always approaching but never reaching the OLS estimates .

有一个情况例外,如果LARS包含了所有的covariates,然后。。。。反正就和OLS等了。

因为一步到位的性质,LARS算起来特别快。

以上的计算都没有好好看,但是大概意思结论和过程都不复杂所以先放着吧。如果有用再拿起来看。

因为typero打了那么多字好卡,所以暂时把文本分割。